Fake Job Scams

Fake Job Scams are a specific type of authorized fraud (scam). Authorized fraud happens when the person initiating the transaction is the legitimate...

In a “Hi Dad” scam, fraudsters pose as the target's child. They lure their targets with an urgent plea for help, often framed around a lost or damaged phone, and request money for an alleged emergency. Through this emotionally charged and highly believable narrative, scammers exploit parental concern and trust, coercing individuals into transferring funds under false pretenses.

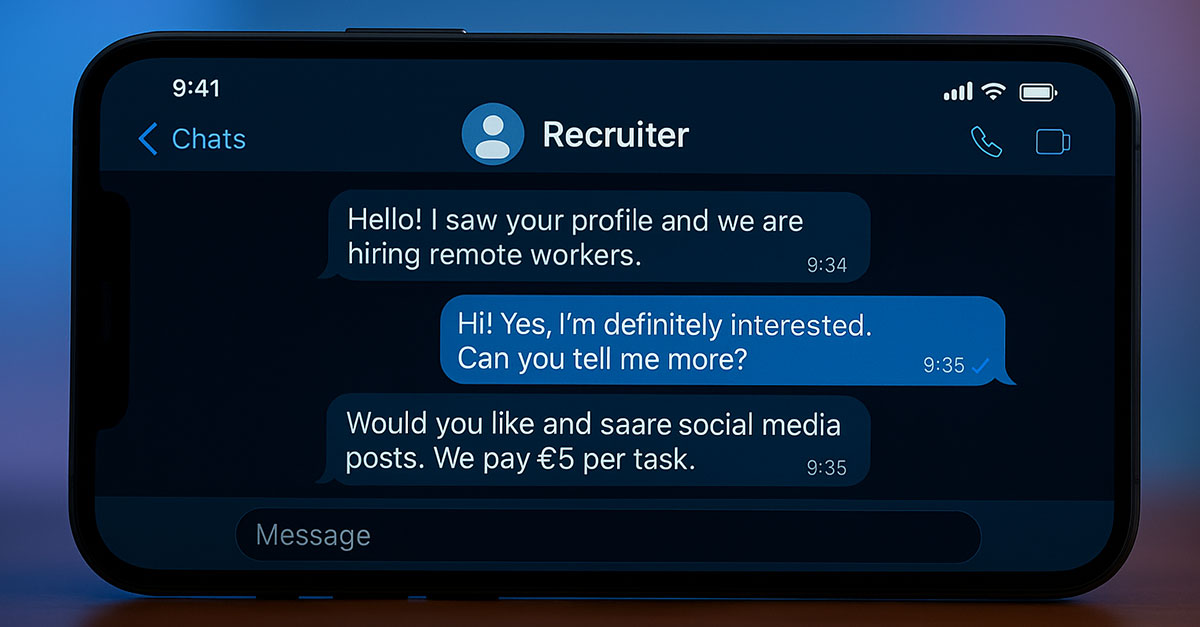

This type of scam is a relatively new variation of impersonation and social engineering scams, which have existed for decades. However, it gained significant traction globally around 2022–2023, evolving rapidly with the increased use of encrypted messaging apps and the heightened emotional vulnerability exploited during and after the COVID-19 pandemic. These scams usually begin with a WhatsApp or SMS message:

Scammers quickly adapted these messages to suit different family roles (“Hi Mum”, “Hi Dad”, “Hey Gran”) depending on the target.

The foundation of the “Hi Dad” scam lies in classic social engineering tactics, in which criminals manipulate people into performing actions or divulging confidential information by exploiting trust, fear, or other emotions. Similar scams have been around since the email-based “Nigerian Prince” scams of the 1990s and 2000s, which also relied on emotional triggers and urgency.

Between 2021 and 2024, these scams experienced a significant global increase. However, only a small fraction of the actual losses are reported by parents. The available data offers a glimpse into the visible impact, though the majority of incidents remain unreported:

As these scams become increasingly prevalent and harmful, affecting parents, banks, and society at large, it is important to understand how they operate. Below is an overview of the typical steps involved in a “Hi Dad” scam:

So why have “Hi Dad” scams become so prevalent?

What follows is an analysis of “Hi Dad” scams, evaluated across four critical dimensions using a 0–10 scale (0 = very low, 10 = very high):

The “Hi Dad” scam requires minimal resources to initiate. Scammers typically only need:

No infrastructure, technical skill, or capital investment is needed beyond what is freely or cheaply available online.

Scammers operate from jurisdictions with weak cybercrime enforcement, often use encrypted instant messaging apps, and quickly delete or abandon accounts post-scam. The lack of physical presence and anonymity makes prosecution difficult. However, increasing awareness and better reporting systems have marginally increased their exposure risk.

Factors contributing to exposure:

Risk triggers:

This scam relies on emotional manipulation, which can be highly effective, but only if the victim has children and is not cautious. Many potential victims become suspicious or try to call the number. Success hinges on:

Estimated conversion rate: 1 to 5%, i.e. roughly 1 to 5 successful scams per batch of 100 messages sent.

Limitations:

In jurisdictions where Verification of Payee (VoP) is implemented, success rates for this type of scam may initially be lower, but the protective effect is likely to decline over time as threat actors adapt and develop new fraud strategies designed to circumvent VoP controls.

Even a single successful scam can generate hundreds or thousands of dollars, often from small initial outlays. Some victims pay €200 to €1,000 without hesitation. Given the low cost of execution and scalability (mass messaging), the ROI is among the highest in low-effort digital fraud.

ROI Example:

Scalability: With automation and AI, scammers can target thousands at once, compounding the return.
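The arithmetic behind this claim can be sketched in a few lines of Python. The conversion rates (1 to 5%) and payment amounts (€200 to €1,000) are the figures quoted above; the €50 cost per batch is a purely hypothetical placeholder, since no cost figure is given here, and the function name `batch_return` is illustrative only.

```python
def batch_return(messages, conversion_pct, payment_eur, cost_eur):
    """Return (expected_revenue, roi) for one batch of scam messages.

    conversion_pct is a percentage (e.g. 1 means 1%).
    roi is expressed as a multiple of the cost (3.0 means a 300% return).
    """
    expected_victims = messages * conversion_pct / 100
    expected_revenue = expected_victims * payment_eur
    roi = (expected_revenue - cost_eur) / cost_eur
    return expected_revenue, roi


# ASSUMPTION: hypothetical cost of sending one batch (SIM cards, message bundles).
ASSUMED_BATCH_COST_EUR = 50.0

# Pessimistic case: 1% conversion, EUR 200 per victim.
low_revenue, low_roi = batch_return(100, 1, 200.0, ASSUMED_BATCH_COST_EUR)

# Optimistic case: 5% conversion, EUR 1,000 per victim.
high_revenue, high_roi = batch_return(100, 5, 1000.0, ASSUMED_BATCH_COST_EUR)

print(f"Revenue per 100 messages: EUR {low_revenue:.0f} to EUR {high_revenue:.0f}")
print(f"ROI multiple at assumed cost: {low_roi:.1f}x to {high_roi:.1f}x")
```

Under these assumptions, even the pessimistic case recovers the assumed outlay several times over, which is why the cost side of the equation does little to deter this type of fraud.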

| # | Category | Score (/10) | Key Insights |
|---|---|---|---|
| 1 | Initial Investment | Low to Moderate · 4/10 | Minimal setup. |
| 2 | Exposure Risk | | Operates anonymously. |
| 3 | Success Rate | Moderate to High · 7/10 | Moderate. |
| 4 | Return on Investment | Moderate to High · 7/10 | High. |

The “Hi Dad” scam is dangerously effective, not because it is sophisticated, but because it is emotionally manipulative, easy to execute, and difficult to trace. Its high return and low barrier to entry mean it will likely remain a favored technique among scammers, especially as they adapt scripts for different demographics and platforms.

Here are some tips on things parents can do to protect themselves against the “Hi Dad” scam.

When “Hi Dad” scams are considered from the perspective of financial institutions and their ability to safeguard both themselves and their customers, several major challenges emerge. Here are a few:

To effectively combat emerging scams such as the “Hi Dad” scam, financial institutions must prioritize the classification and categorization of both internal and external accounts. This enables the early detection of subtle behavioral changes that may signal emerging threats. A continuous, dynamic assessment of account activity is essential, even when indicators are weak or fragmented.

This effort should extend beyond a bank’s internal ecosystem to include accounts it interacts with externally. Acoru facilitates this by tracking and categorizing every account connected to an internal one, regardless of the originating institution. This broader visibility helps uncover unusual patterns early and supports a more robust risk monitoring framework.
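To make the idea of tracking connected accounts concrete, here is a minimal sketch in Python. It is not Acoru's implementation or any bank's production logic: the class `PayeeTracker`, the `Transfer` record, the "ok"/"review" labels, and the fan-in threshold of 3 are all hypothetical choices made for illustration. The sketch records which customers have paid which destination accounts and flags a first-time payment to an account that several distinct customers have recently started paying, one weak signal that could hint at a collection account.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    sender: str    # internal customer account
    payee: str     # destination account, possibly at another institution
    amount: float


class PayeeTracker:
    """Hypothetical tracker of sender-to-payee relationships."""

    def __init__(self, fan_in_threshold=3):
        # ASSUMPTION: the threshold is arbitrary and would need tuning.
        self.fan_in_threshold = fan_in_threshold
        self.senders_by_payee = defaultdict(set)
        self.payees_by_sender = defaultdict(set)

    def observe(self, transfer):
        """Record a transfer and return 'review' or 'ok'."""
        first_time = transfer.payee not in self.payees_by_sender[transfer.sender]
        self.payees_by_sender[transfer.sender].add(transfer.payee)
        self.senders_by_payee[transfer.payee].add(transfer.sender)
        fan_in = len(self.senders_by_payee[transfer.payee])
        # Flag only first-time payments into an account many customers now pay.
        if first_time and fan_in >= self.fan_in_threshold:
            return "review"
        return "ok"


tracker = PayeeTracker()
results = [
    tracker.observe(Transfer("cust-a", "ext-x", 400.0)),
    tracker.observe(Transfer("cust-b", "ext-x", 650.0)),
    tracker.observe(Transfer("cust-c", "ext-x", 900.0)),
    tracker.observe(Transfer("cust-a", "ext-x", 100.0)),  # repeat payee for cust-a
]
print(results)
```

A real system would combine many such weak signals with time windows and account categories rather than rely on a single rule; the point here is only that visibility into external counterparties is what makes this kind of check possible.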

Equally important is the need for institutions to responsibly dismantle information silos and begin sharing intelligence on suspicious accounts in real time. Fraud schemes frequently span multiple banks, and a lack of coordination creates exploitable blind spots. By exchanging behavioral insights and threat indicators, institutions can build a more comprehensive picture of malicious activity, enabling faster and more accurate responses. Acoru supports this collaboration through a configurable approach that ensures privacy controls are upheld, allowing banks to define their own parameters while safeguarding sensitive information.

Here are some real-life “Hi Dad” examples that have been made public through traditional media in various regions. The real financial losses incurred by citizens through these types of scams can be much higher than what is currently being reported.

An Adelaide father, Andreas Flenche, lost $16,000 after receiving a text from someone posing as his daughter, who claimed her phone was damaged and that she needed money urgently. He transferred the funds before realizing it was a scam. ANZ Bank blocked a final $5,000, but most of the money was lost.

An 83-year-old woman named Jo from the UK lost £47,000 to a “Hi Mum” impersonation scam while undergoing cancer treatment. A scammer posing as her son sent a text claiming his phone number had changed and gradually convinced her to transfer large sums to cover urgent bills. Trusting the messages and wishing to help, Jo never questioned the requests and avoided contacting her real son out of concern for his supposed struggles. Despite being flagged as a vulnerable customer, her bank approved multiple transactions without intervention, later admitting a failure in safeguarding. Jo was eventually refunded in full, but the emotional toll on her and her son was severe. This case highlights how emotionally manipulative and high-impact these scams can be, particularly against elderly and isolated individuals.

In September 2024, Frank Shooster, a 70-year-old retired attorney in Florida, nearly lost $35,000 after scammers used AI-generated voice cloning to impersonate his son, Jay Shooster, a political candidate. The fake “Jay” called claiming he’d been in a car crash, arrested, and needed urgent bail. A second scammer, posing as a lawyer, demanded payment via a cryptocurrency ATM, raising Frank’s suspicions. The scam was nearly successful, as the cloned voice was highly convincing (likely extracted from Jay’s 15-second campaign ad). Frank only avoided the loss after his daughter uncovered the deception. Now, Jay is pushing for AI regulation, calling for voice authentication, liability for misuse, and watermarking of synthetic content to prevent future abuse.

These real-life cases demonstrate the evolving sophistication and emotional manipulation at the core of “Hi Dad”-style scams. Whether through simple text impersonation or advanced AI voice cloning, scammers are adept at exploiting moments of vulnerability, familial trust, and urgency to defraud victims of substantial sums. The financial and psychological impact on individuals, especially the elderly and emotionally engaged, can be profound.

While the reported figures are already significant, they likely underrepresent the true scale of the problem, as many victims never come forward due to embarrassment or lack of awareness. These examples underscore the urgent need for greater public awareness, stronger safeguards from financial institutions, and clear regulatory frameworks for emerging technologies like AI. Without these measures, such scams will only become more frequent and damaging.

As “Hi Dad” scams continue to evolve in both technique and reach, their impact will likely grow, driven by low execution costs, weak detection mechanisms, and the increasing sophistication of impersonation tools, including AI-generated voice cloning. Going forward, this scam type poses a persistent and scalable threat, especially to emotionally vulnerable individuals such as parents and the elderly.

For individuals, the key will be ongoing education and vigilance. Public awareness campaigns must go beyond simple warnings to include practical verification methods and emotional literacy, empowering people to pause, question, and verify even the most urgent and intimate messages.

For financial institutions, detecting scams in which the legitimate account holder authorizes the transaction is a formidable challenge. In such cases, traditional detection signals (such as device ID, network, location, or behavioral patterns) are almost useless, because the transaction genuinely originates from the customer's own device and session. To adapt, banks and other financial platforms will need to invest in robust account classification, contextual transaction analysis, and cross-channel behavioral monitoring, while minimizing the impact on legitimate activity as much as possible. Additionally, upcoming regulations will require financial institutions to reimburse customers in nearly all such cases, increasing the need to identify these situations proactively to avoid refunds and chargebacks.

For regulators and technology providers, this means acting swiftly to address the risks posed by emerging technologies. Voice authentication protocols, AI usage transparency, and digital content watermarking will be crucial to staying ahead of scammers who are increasingly using generative tools to deceive.

In short, the path forward demands a multi-pronged response: technological, regulatory, institutional, and educational. Only with collaborative safeguards across sectors can we hope to contain the growth and emotional fallout of this highly manipulative form of digital fraud.
